Pavel Rykov

July 26, 2023 ・ Kubernetes

Kubernetes Performance Tuning

Introduction

In an era where containerization and microservices have become the norms, Kubernetes has established itself as the go-to platform for managing these environments. However, a well-functioning Kubernetes cluster requires continuous attention and tuning to operate optimally. This publication aims to guide both beginners and experienced users through the essentials of Kubernetes performance tuning.

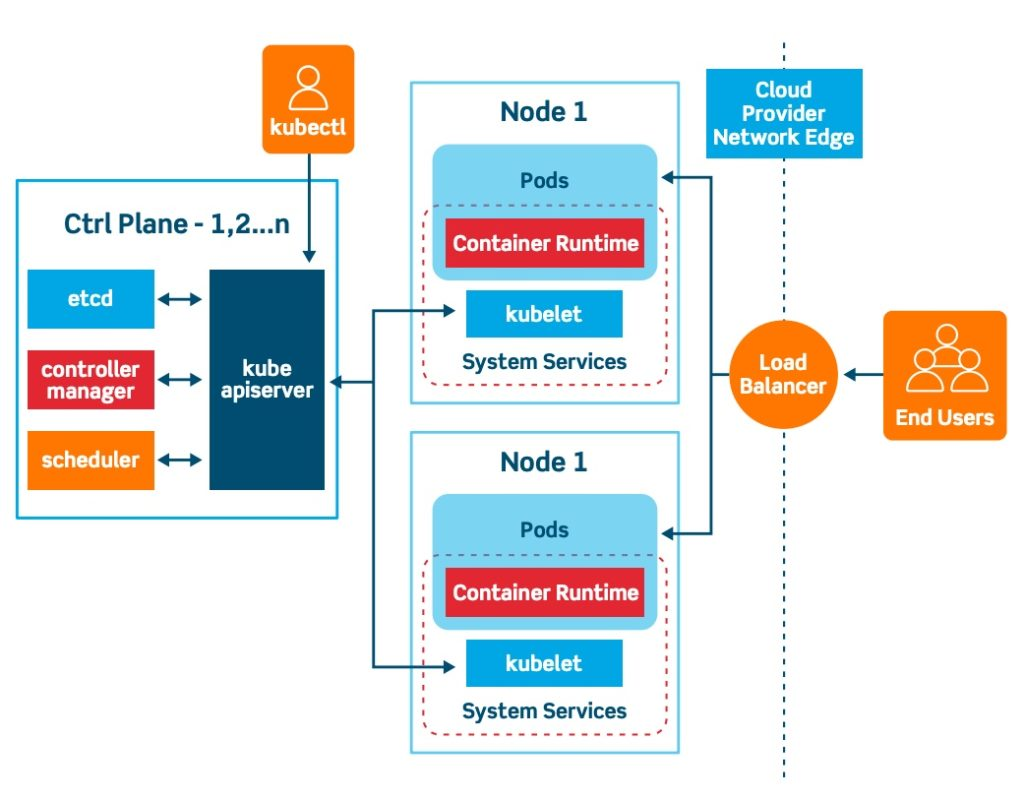

Source: Kubernetes Concepts and Architecture by Vamsi Chemitiganti.

Kubernetes, often referred to as K8s, is an open-source platform designed to automate deploying, scaling, and managing containerized applications. It was originally developed by Google, drawing from their experience of running billions of containers a week, and is now maintained by the Cloud Native Computing Foundation.

Kubernetes provides a framework to run distributed systems resiliently, handling and scheduling containers onto nodes within a computing environment. It takes care of the networking, storage, service discovery, and many more aspects that would otherwise require manual orchestration.

It's critical to have a good understanding of Kubernetes and its inner workings before diving into performance tuning. If you need a refresher, I recommend reading the official Kubernetes documentation and the Kubernetes and containerization series of articles.

Understanding Resource Usage in Kubernetes

In Kubernetes, each Pod and its containers have configurable resource requests and limits. These settings influence how Kubernetes schedules Pods onto Nodes, as well as the Pod's performance and reliability.

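Resource Requests: The amount of a resource that Kubernetes reserves for a container. If a Node doesn't have enough unreserved resources to satisfy the request, the Pod will not be scheduled onto that Node. A running container can use more than it requested, up to its limit (or up to the Node's spare capacity if no limit is set), as long as other Pods are not using those resources.

Resource Limits: The maximum amount of a resource that a container can use. A CPU limit is enforced by throttling: a container that tries to exceed it is slowed down, not killed. A memory limit is a hard cap: a container that exceeds it is terminated by the kernel's out-of-memory (OOM) killer, regardless of how much memory is free on the Node. Separately, when the Node itself comes under memory pressure, the kubelet may evict Pods, starting with those that use more memory than they requested.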

Resource allocation is done at the individual container level, but it's also useful to consider the collective resources of all the containers running in a Pod. Here's an example configuration for a Pod with these settings:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-application-pod
    spec:
      containers:
        - name: my-application-container
          image: my-application
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"
        - name: my-sidecar-container
          image: my-sidecar
          resources:
            requests:
              memory: "32Mi"
              cpu: "100m"
            limits:
              memory: "64Mi"
              cpu: "200m"

This Pod contains two containers, each with its own resource requests and limits. The Kubernetes scheduler considers the sum of the requests for all containers in the Pod when deciding on which Node to place the Pod.
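As a quick worked check, here is the sum the scheduler would use for the Pod above. This is a simplified sketch that ignores init containers and any Pod overhead:

```latex
\begin{align*}
\text{CPU request}    &= 250\text{m} + 100\text{m} = 350\text{m} \\
\text{memory request} &= 64\,\text{Mi} + 32\,\text{Mi} = 96\,\text{Mi}
\end{align*}
```

A Node is a candidate only if its allocatable resources, minus the requests of the Pods already placed on it, leave at least 350m of CPU and 96Mi of memory. Limits play no part in this calculation.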

Setting appropriate resource requests and limits is essential for both the performance and reliability of workloads in Kubernetes. Set them too low, and your application might not have the resources it needs to perform well. Set them too high, and you may waste resources or prevent other Pods from being scheduled onto the Node.

Monitoring tools (like Prometheus combined with Grafana, for example) can provide insights into actual resource usage and help set appropriate requests and limits.

Optimizing Kubernetes Resource Usage

Effective Resource Requests and Limits

Establishing appropriate resource requests and limits is crucial for Kubernetes performance optimization. The Kubernetes scheduler uses the requests when deciding where to place Pods. On the Node, the container runtime enforces the limits, for example by throttling a container's CPU.

    apiVersion: v1
    kind: Pod
    metadata:
      name: complex-pod
    spec:
      containers:
        - name: app-container
          image: my-application
          resources:
            requests:
              memory: "256Mi"
              cpu: "500m"
            limits:
              memory: "512Mi"
              cpu: "1000m"
        - name: logging-container
          image: my-logger
          resources:
            requests:
              memory: "128Mi"
              cpu: "250m"
            limits:
              memory: "256Mi"
              cpu: "500m"

In this configuration, we have two containers inside the Pod. Each has its own resource configuration. The app-container is the main application, so it has higher resource requests and limits. The logging-container is a sidecar container that logs the application's output. It has lower resource requests and limits.

Monitoring actual resource usage over time, and adjusting these values accordingly, can help to strike the right balance between performance and resource efficiency.

Using Kubernetes Quality of Service (QoS) Classes

Kubernetes uses Quality of Service (QoS) classes to decide which Pods to evict first when a Node runs short of resources. Every Pod is assigned one of three QoS classes:

- Guaranteed

    apiVersion: v1
    kind: Pod
    metadata:
      name: guaranteed-pod
    spec:
      containers:
        - name: guaranteed-container
          image: my-application
          resources:
            requests:
              memory: "256Mi"
              cpu: "500m"
            limits:
              memory: "256Mi"
              cpu: "500m"

In this configuration, the requests equal the limits, so this Pod is guaranteed its resources and is the least likely to be killed if the Node runs out of resources.

- Burstable

    apiVersion: v1
    kind: Pod
    metadata:
      name: burstable-pod
    spec:
      containers:
        - name: burstable-container
          image: my-application
          resources:
            requests:
              memory: "256Mi"
              cpu: "500m"
            limits:
              memory: "512Mi"
              cpu: "1000m"

In this configuration, the requests are lower than the limits. This Pod can use more resources when they are available but is more likely to be killed than Guaranteed Pods when the Node runs out of resources.

- BestEffort

    apiVersion: v1
    kind: Pod
    metadata:
      name: besteffort-pod
    spec:
      containers:
        - name: besteffort-container
          image: my-application

In this configuration, there are no requests or limits specified. This Pod uses resources when they are available and is the most likely to be killed when the Node runs out of resources.

A Pod's QoS class is determined by its resource requests and limits. A Pod is Guaranteed if every container in the Pod sets both a CPU and a memory limit, and each request equals the corresponding limit. It's Burstable if it doesn't qualify as Guaranteed but at least one container sets a CPU or memory request or limit. It's BestEffort if no container sets any requests or limits at all.

By adjusting your Pods' resource requests and limits, you can influence their QoS class and thus how likely they are to be evicted when a Node is under resource pressure.
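One consequence of these rules is easy to miss: a single container without resource settings changes the class of the whole Pod. Here's a minimal sketch of that case. The Pod name, container names, and images are hypothetical placeholders.

```yaml
# Hypothetical Pod used only to illustrate QoS classification.
apiVersion: v1
kind: Pod
metadata:
  name: mixed-qos-pod
spec:
  containers:
    - name: app-container
      image: my-application
      resources:
        # On its own, this container would qualify for Guaranteed:
        # requests equal limits for both CPU and memory.
        requests:
          memory: "256Mi"
          cpu: "500m"
        limits:
          memory: "256Mi"
          cpu: "500m"
    - name: sidecar-container
      image: my-sidecar
      # No resources are set here, so the Pod as a whole is
      # classified as Burstable, not Guaranteed.
```

Kubernetes records the class it assigned in the Pod's status.qosClass field, so you can confirm the result on a running Pod instead of inferring it from the manifest.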

Auto Scaling in Kubernetes

Kubernetes supports two forms of Pod autoscaling:

- Horizontal Pod Autoscaler (HPA) scales the number of Pod replicas up or down based on observed CPU utilization, or based on custom metrics provided by third-party extensions.

- Vertical Pod Autoscaler (VPA), on the other hand, adjusts the CPU and memory requests of individual Pods, allowing them to use more or fewer resources as needed. Unlike the HPA, the VPA is an add-on that must be installed in the cluster separately.

Both forms of autoscaling can help to optimize resource usage in Kubernetes, but they require careful tuning and monitoring to ensure they don't cause resource over-provisioning or under-provisioning.

To set up HPA based on CPU usage, you can use a configuration like the following:

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: my-application-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: my-application
      minReplicas: 1
      maxReplicas: 10
      targetCPUUtilizationPercentage: 50

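In this configuration, Kubernetes adds replicas of your application (up to a maximum of 10) when the average CPU utilization across its Pods, measured as a percentage of their CPU requests, exceeds 50%, and removes replicas (down to a minimum of 1) when utilization falls below that target. The Pods must therefore declare CPU requests for this HPA to work.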
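To get a feel for how many replicas the HPA will ask for, it helps to look at the ratio the Kubernetes documentation gives for its core calculation. The formula below is a simplified view: it leaves out the tolerance band, stabilization windows, and the handling of Pods that are not yet ready.

```latex
\[
\text{desiredReplicas} =
  \left\lceil
    \text{currentReplicas} \times
    \frac{\text{currentMetricValue}}{\text{desiredMetricValue}}
  \right\rceil
\]
```

For example, with 4 replicas averaging 80% CPU utilization against the 50% target above, the HPA would aim for ⌈4 × 80 / 50⌉ = ⌈6.4⌉ = 7 replicas. The result is always clamped to the range between minReplicas and maxReplicas.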

VPA is a bit more complex because it modifies the resource requests of your Pods. Here's a simple example:

    apiVersion: autoscaling.k8s.io/v1
    kind: VerticalPodAutoscaler
    metadata:
      name: my-application-vpa
    spec:
      targetRef:
        apiVersion: "apps/v1"
        kind: Deployment
        name: my-application
      updatePolicy:
        updateMode: "Auto"

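In this configuration, the VPA will automatically adjust the CPU and memory requests of the Pods in the my-application Deployment, based on their usage. In Auto mode, applying a new recommendation typically means evicting the Pod so that it is recreated with the updated requests, so expect restarts. Avoid pointing a VPA and an HPA at the same workload if both act on CPU or memory, because the two controllers will work against each other.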
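If automatic evictions are too disruptive for a first rollout, a more cautious starting point is to let the VPA only compute recommendations and to bound what it may suggest. Here's a sketch under those assumptions. The object name and the minimum and maximum values are illustrative, not recommendations.

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: my-application-vpa-recommend   # hypothetical name
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: my-application
  updatePolicy:
    updateMode: "Off"          # compute recommendations only; never evict Pods
  resourcePolicy:
    containerPolicies:
      - containerName: "*"     # apply the bounds to every container in the Pod
        minAllowed:            # illustrative lower bound
          cpu: "100m"
          memory: "64Mi"
        maxAllowed:            # illustrative upper bound
          cpu: "1"
          memory: "1Gi"
```

The recommendations appear in the status of the VerticalPodAutoscaler object, where you can compare them with your current requests before deciding whether to switch to an automatic mode.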

Optimizing Storage and Networking Performance

Kubernetes provides a variety of storage and networking options, each with their own performance characteristics.

For storage, the choice of volume type and the configuration of the underlying storage system can have a big impact on performance. For example, SSDs generally offer better performance than HDDs, and network-attached storage may be slower than local disk.

For networking, different network plugins offer different capabilities and performance characteristics. The choice of network plugin and its configuration can affect network latency, bandwidth, and packet loss.

Let's take a closer look.

Storage

Kubernetes provides a few options to configure storage for your application. For instance, let's consider a Pod that requires persistent storage:

    apiVersion: v1
    kind: Pod
    metadata:
      name: storage-pod
    spec:
      containers:
        - name: app-container
          image: my-application
          volumeMounts:
            - mountPath: /data
              name: my-volume
      volumes:
        - name: my-volume
          persistentVolumeClaim:
            claimName: my-pvc

In this configuration, the app-container uses a Persistent Volume Claim (PVC) called my-pvc for storing its data. The PVC would be linked to a Persistent Volume (PV) which is provisioned either manually or dynamically through a Storage Class. The performance characteristics of the underlying storage backend and the configuration of the PV can affect the application's performance.

Persistent Volume Types

Kubernetes provides several types of Persistent Volumes (PVs) that cater to different storage needs and infrastructure:

- GCEPersistentDisk: This is a Google Compute Engine Persistent Disk. It's a good option if you're running your cluster on Google Cloud.

- AWSElasticBlockStore: This is an Amazon Web Services (AWS) EBS volume. It's a good option if you're running your cluster on AWS.

- AzureDisk/AzureFile: These are storage options for clusters running on Microsoft Azure.

- NFS: This is a Network File System volume. It can be used with any cloud provider or on-premises.

- iSCSI: This is an Internet Small Computer System Interface volume. It can be used with any cloud provider or on-premises.

- Local: This represents a mounted local storage device such as a disk, partition or directory.

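Each PV type has its own performance characteristics and should be chosen based on your application's needs and your infrastructure. Note that the cloud-specific in-tree volume plugins listed above have been deprecated in favor of Container Storage Interface (CSI) drivers, so check what your Kubernetes version supports. For more details, you can explore our Ceph example in the Installing Ceph as a Distributed Storage Solution and Connecting it to Kubernetes article, as well as the official Kubernetes documentation.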

Configuring Storage Size

When creating a Persistent Volume Claim (PVC), you can specify the storage size that you need:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi

In this example, we're requesting a PVC with 10 GiB of storage. If a suitable PV exists, the PVC will bind to it. If no suitable PV exists and dynamic provisioning is configured, a new PV will be provisioned to satisfy the claim.

It's important to request an appropriate amount of storage for your application. Requesting too much can lead to wasted resources, while requesting too little can lead to application failures.

Configuring Storage Performance

A StorageClass lets you choose the performance tier of dynamically provisioned volumes. For example, on Google Cloud, a StorageClass can request SSD-backed persistent disks:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: fast-storage
    provisioner: kubernetes.io/gce-pd
    parameters:
      type: pd-ssd

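In this example, we're defining a StorageClass that provisions SSD-backed disks (pd-ssd), which generally provide faster I/O than standard persistent disks. This would be a good choice for I/O-intensive applications. The kubernetes.io/gce-pd provisioner shown here is the legacy in-tree plugin; clusters that use the Compute Engine persistent disk CSI driver specify pd.csi.storage.gke.io instead.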
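A StorageClass has no effect until a claim asks for it. Here's a minimal sketch of a PVC that requests a volume from the fast-storage class defined above. The claim name and size are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-fast-pvc                # hypothetical name
spec:
  storageClassName: fast-storage   # must match metadata.name of the StorageClass
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi                # illustrative size
```

A claim that omits storageClassName falls back to the cluster's default StorageClass, if one is configured, which may not be the performance tier you expect.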

In summary, Kubernetes provides a wide array of options for configuring storage, and the right choices can significantly improve your application performance.

Network

The design of Kubernetes networking is unique in that it assigns each Pod a unique IP address, and all containers within a Pod share a network namespace, including the IP address and network ports. Containers within a Pod can communicate with each other using localhost. Let's look at this in more detail.

Pod-to-Pod Communications

Pods can communicate with other Pods without NAT, regardless of which Node they reside on. This is made possible by Container Network Interface (CNI) network plugins such as Flannel, Calico, Cilium, and Weave, some of which build an overlay network on top of the Node network. The choice of network plugin can have a significant impact on network performance.

Services and Ingress

A Service is the abstraction that exposes a set of Pods behind a stable network endpoint. There are several types of Services, each with different performance implications:

- ClusterIP is the default Service type. It provides a stable internal IP in the cluster that forwards traffic to the matching Pods.

- NodePort is a type of Service that listens on the same port on every Node in the cluster and forwards traffic to the matching Pods.

- LoadBalancer is a Service that leverages the cloud provider's load balancer to forward external traffic to a Service in the cluster.
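To make the Service types concrete, here's a minimal sketch of a ClusterIP Service. The Service name, the app: my-application Pod label, and the port numbers are assumptions made for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-application       # hypothetical Service name
spec:
  type: ClusterIP            # the default; change to NodePort or LoadBalancer as needed
  selector:
    app: my-application      # assumed label on the backing Pods
  ports:
    - name: http
      port: 80               # port exposed on the Service's cluster IP
      targetPort: 8080       # assumed port the container listens on
```

Switching the type field is all it takes to move between the three variants, so it is cheap to start with ClusterIP and widen exposure only when external access is actually required.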

Ingress is another way to handle incoming traffic, particularly HTTP/HTTPS traffic. It can provide load balancing, SSL termination, and name-based virtual hosting. You can find more details in the Kubernetes networking series of articles.
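Here's a minimal sketch of name-based routing with an Ingress. It assumes that an Ingress controller is installed in the cluster and that a Service named my-application listens on port 80. The host name and both object names are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-application-ingress     # hypothetical name
spec:
  rules:
    - host: app.example.com        # placeholder host for name-based virtual hosting
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-application   # assumed existing Service
                port:
                  number: 80
```

An Ingress object only describes routing rules. The traffic itself flows through whichever Ingress controller you run, so that controller's capacity and configuration are what determine latency and throughput.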

Network Policies

Kubernetes Network Policies are like firewall rules for your cluster. They define how Pods communicate with each other and with other network endpoints. They are enforced by the network plugin, so they only take effect if your plugin supports them.

Here's a simple example of a Network Policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: internal
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
        - Ingress
        - Egress
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  role: frontend
      egress:
        - to:
            - podSelector:
                matchLabels:
                  role: log

This Network Policy applies to Pods labeled role: db. It allows inbound traffic to them only from Pods labeled role: frontend in the same namespace, and allows outbound traffic from them only to Pods labeled role: log.
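One side effect of restricting egress this tightly is that the db Pods can no longer reach the cluster DNS service, which can show up as slow or failing name lookups. Network Policies are additive, so a companion policy can re-open DNS. Here's a sketch that assumes cluster DNS runs in the kube-system namespace; the policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress     # hypothetical name
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              # Label that Kubernetes sets automatically on every namespace.
              kubernetes.io/metadata.name: kube-system   # assumption: DNS runs here
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

With both policies applied, the db Pods can send traffic to the log Pods and to DNS on port 53 in kube-system, and nothing else.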

Network Policies can help to ensure the network security of your application and can influence its performance, especially in large, complex systems.

Performance Troubleshooting in Kubernetes

In the world of complex systems, performance issues can arise from a myriad of sources. When it comes to Kubernetes, understanding how to diagnose and troubleshoot these issues is a critical skill for any DevOps professional. Let's dive into some essential techniques.

Understanding Kubernetes Events

Events in Kubernetes are records of things that happen in the cluster, such as a Pod being scheduled, a container being restarted, or a probe failing. They offer a chronological account of what has transpired within the system, but they are short-lived: by default they are kept for only about an hour.

Kubernetes events are namespaced, meaning you can view events tied to a specific namespace or, with the --all-namespaces flag, get an overview of events across the entire cluster. The standard way to view events is by using the kubectl command.

For instance, you can view all events in the default namespace as follows:

kubectl get events --namespace default

You can also view events for a specific object. For example, to view events for a Pod named my-pod, you can use:

kubectl describe pod my-pod

This command will display information about the Pod, including a list of events related to it.

When it comes to diagnosing performance-related issues, we're primarily interested in events such as:

- FailedScheduling: The scheduler could not place the Pod on any Node, often because no Node has enough unreserved CPU or memory. This can be a signal of resource contention, or of oversized requests, in your cluster.

- Unhealthy: A liveness, readiness, or startup probe failed for one of the Pod's containers. Repeated failures lead to container restarts or to the Pod being taken out of Service endpoints, both of which hurt performance.

- BackOff: A container keeps crashing (or its image keeps failing to pull) and the kubelet is delaying the next attempt. This can indicate a problem with your application that's impacting its performance.

- SystemOOM: The kernel's OOM killer was triggered on a Node because the Node ran out of memory. This could be a sign that your applications are using too much memory, or that your Nodes are undersized.

The kubectl get events command outputs a lot of information, and you might want to filter events based on certain criteria. For instance, to view only FailedScheduling events, you could use a command like this:

kubectl get events --field-selector reason=FailedScheduling

In conclusion, Kubernetes events can provide a treasure trove of information for diagnosing performance issues. By understanding and analyzing these events, you can identify and address the root causes of these issues.

Monitoring Metrics with Prometheus

Prometheus is a powerful open-source monitoring and alerting toolkit that can collect time-series data. Integration of Prometheus with Kubernetes provides insightful metrics about the usage of the system, helping you to maintain the system's health and performance.

Here, we'll outline a basic setup process for deploying Prometheus in a Kubernetes cluster using the Kubernetes Prometheus Operator.

Deploy the Prometheus Operator

Prometheus Operator simplifies the Prometheus setup on Kubernetes using custom resources. You can deploy it via Helm:

    # Add the Helm repo
    helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

    # Update Helm repos
    helm repo update

    # Install the chart
    helm install [RELEASE_NAME] prometheus-community/kube-prometheus-stack

This command installs the Prometheus Operator and a range of other useful tools, including Prometheus, Alertmanager, Grafana, and kube-state-metrics.

Monitor Your Pods

With the Prometheus Operator in place, you can start monitoring your Pods using ServiceMonitor resources. These are custom resources, not built into Kubernetes, that define how a service should be monitored.

Suppose you have a Service my-service, labeled app: my-service, that exposes Prometheus metrics at the /metrics endpoint on port 8080, and that this Service port is named metrics. Here's how you can set up a ServiceMonitor to scrape these metrics:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: my-service-monitor
      labels:
        release: [RELEASE_NAME]
    spec:
      selector:
        matchLabels:
          app: my-service
      endpoints:
        - port: metrics
          path: /metrics

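This ServiceMonitor tells Prometheus to fetch metrics from the /metrics path on the port named metrics (8080 in this example) of any Service with the app: my-service label. Note that the selector matches Services, not Pods, and that the port is referenced by name, not by number. With the chart's default settings, the Prometheus Operator discovers ServiceMonitors whose release label matches the Helm release and configures Prometheus to start scraping them.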
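Because the ServiceMonitor matches a Service label and a named port, the Service itself has to provide both. Here's a sketch of a Service that would satisfy the ServiceMonitor above. The Pod label used in its selector is an assumption.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
  labels:
    app: my-service        # matched by the ServiceMonitor's spec.selector
spec:
  selector:
    app: my-service        # assumed label on the Pods that serve the metrics
  ports:
    - name: metrics        # matched by the ServiceMonitor's endpoints[].port
      port: 8080
      targetPort: 8080
```

If the target never shows up in Prometheus, a missing Service label or an unnamed port is a common cause worth checking first.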

Access Prometheus Dashboard

You can access the Prometheus dashboard to see the scraped metrics. If you're running Kubernetes on your local machine (with Minikube, for example), you can use port-forwarding:

kubectl port-forward service/[RELEASE_NAME]-kube-prometheus-stack-prometheus 9090

Then, navigate to localhost:9090 in your web browser.

From the dashboard, you can run queries and inspect the state of your alerts. The alerting rules themselves are defined separately, for example through PrometheusRule resources.

Note that while Prometheus is excellent for storing time-series data and running queries, you'd typically use Grafana, which is also installed by the kube-prometheus-stack Helm chart, for more complex visualizations.
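With the Prometheus Operator, custom alerts can be declared as PrometheusRule resources. Here's a sketch of one that is relevant to performance tuning: it fires when a container is being CPU-throttled in a large share of scheduling periods, which often means its CPU limit is too low. The rule name, release label value, 25% threshold, and 10-minute duration are all illustrative assumptions.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: my-application-throttling   # hypothetical name
  labels:
    release: my-release             # assumption: replace with your Helm release name
spec:
  groups:
    - name: my-application.rules
      rules:
        - alert: HighCpuThrottling
          # Fraction of CFS periods in which the container was throttled,
          # averaged over the last 5 minutes.
          expr: |
            sum by (namespace, pod, container) (rate(container_cpu_cfs_throttled_periods_total[5m]))
              /
            sum by (namespace, pod, container) (rate(container_cpu_cfs_periods_total[5m]))
              > 0.25
          for: 10m                  # illustrative: only fire if sustained
          labels:
            severity: warning
          annotations:
            summary: "Container is CPU-throttled in more than 25% of periods"
```

Once the Operator picks up the rule, it is listed on the Alerts page of the Prometheus dashboard, and firing alerts are forwarded to Alertmanager.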

Profiling Applications

Sometimes, the issue might not be with the Kubernetes infrastructure, but with the application running on it. Profiling tools can provide insights into how your application is performing.

Most programming languages have their own profiling tools that can measure function execution time, memory allocation, database queries, and other performance-related data. Examples include pprof for Go, cProfile for Python, and JProfiler for Java.

Logs Examination

Logs are often the first place to look when troubleshooting performance issues. In Kubernetes, you can access the logs of a Pod using the kubectl logs command:

kubectl logs my-pod-name

This will print out the logs of the Pod to your console. If your Pod has more than one container, you can specify which container's logs you want to access with the -c/--container option.

For more advanced log aggregation and search, you can use a centralized logging solution such as Elasticsearch, Logstash, and Kibana (the ELK Stack), or Grafana Loki.

Conclusion

Performance tuning in Kubernetes is both a science and an art. It spans resource management, network and storage optimization, troubleshooting, and the use of monitoring and performance testing tools. With the growing adoption of Kubernetes in production environments, understanding these aspects is crucial for efficient application delivery.

We discussed several methodologies for optimizing Kubernetes' performance:

- Resource management techniques to guarantee the Pods have the necessary CPU and memory to perform optimally.

- Network and storage tuning techniques to ensure speedy communication between the Pods and quick data access, both critical for high-performing applications.

- Troubleshooting methodologies, including analyzing Kubernetes events, setting up monitoring using Prometheus, and interpreting application logs.

Continuous performance testing with open-source load testing tools such as Locust, JMeter, and Siege complements these techniques.

By implementing these strategies, we can create a high-performing and efficient Kubernetes environment. It's important to remember that performance tuning is an ongoing process that doesn't stop once your applications are up and running. Always monitor your applications and make adjustments as needed to meet changing requirements and conditions.

While this guide provides a comprehensive overview of the main aspects of Kubernetes performance tuning, the world of Kubernetes is vast, with many more resources and tools available to help you optimize your clusters and applications. As always, continued learning and experimentation are the keys to mastering Kubernetes performance tuning.

Thank you for joining us in this exploration of Kubernetes Performance Tuning. We hope that you found it informative and that it will serve as a valuable resource in your Kubernetes journey.
